This is free software, MIT licensed, therefore it comes with zero warranty.

Rendering animations will render every frame. This is a process that takes a fair amount of time and produces lots of images. Be sure to make a directory specifically for this output, and be patient.

My first thought was that you were asking about UV data, which obviously is already mapped 2D->3D. Assuming that the camera data for the rendered frame (sensor, FoV, aspect ratio) and the camera's position/rotation are known, you could translate that into world space, yes. However, what you want is called translation. You will need to learn how to use the matrix.
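As a rough illustration of the camera-to-world mapping mentioned above, here is a minimal sketch in plain Python. It is not part of the add-on. It assumes a pinhole camera that looks along its local -Z axis with +Y up (the convention Blender cameras use), a horizontal FoV in degrees, an aspect ratio given as width / height, and a 3x3 camera-to-world rotation matrix. The function name `pixel_to_world_ray` and its parameters are hypothetical. A 2D pixel only determines a ray in world space, not a single point, so the function returns an origin and a direction.

```python
import math


def pixel_to_world_ray(u, v, fov_x_deg, aspect, cam_rotation, cam_position):
    """Return (origin, direction) of the world-space ray through a pixel.

    u, v         -- pixel position in normalized coordinates, each in [-1, 1],
                    with +u to the right and +v up (0, 0 is the image center)
    fov_x_deg    -- horizontal field of view in degrees
    aspect       -- image width divided by image height
    cam_rotation -- 3x3 camera-to-world rotation matrix (list of rows)
    cam_position -- camera location in world space (x, y, z)
    """
    half_width = math.tan(math.radians(fov_x_deg) / 2.0)
    half_height = half_width / aspect

    # Direction in camera space: the camera looks down its local -Z axis.
    cam_dir = (u * half_width, v * half_height, -1.0)

    # Rotate the direction into world space (matrix times vector).
    world_dir = [sum(row[i] * cam_dir[i] for i in range(3)) for row in cam_rotation]

    # Normalize so the direction has unit length.
    length = math.sqrt(sum(c * c for c in world_dir))
    direction = tuple(c / length for c in world_dir)

    return tuple(cam_position), direction
```

For example, with an identity rotation the center pixel `(0, 0)` gives a ray pointing straight down the world -Z axis from the camera position. Recovering an actual 3D point additionally needs a depth value or an intersection with the scene geometry.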

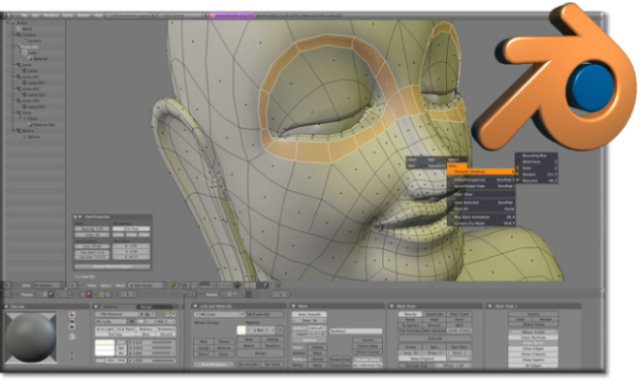

Add-on for projecting 3D animation to 2D image sheets using Blender.

How to install the addon

Go to Edit -> Preferences -> Add-ons -> Install. You'll be able to see a panel under the Tool section, under the Properties window. You'll also have to set up your camera and lights before rendering. Tune the parameters in the panel and click on Render to generate your image(s) in the output path you specified.

This only supports the EEVEE render engine for now, and works with Blender 2.83 LTS.

If you're not happy about something here, feel free to improve this program by making your own pull request, or if you can't/don't want to program, please open an issue and let me know about it. While you're at it, a motivational star will surely make me want to improve this.
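For readers who prefer scripting, the general setup (EEVEE engine, a dedicated output directory, one image per animation frame) can be sketched with Blender's standard Python API. This is not the add-on's own code and it does not reproduce the add-on's panel parameters. The function names `prepare_output_dir` and `render_animation_with_eevee` and the `frame_` prefix are hypothetical, and the second function only runs inside Blender, where the `bpy` module is available.

```python
from pathlib import Path


def prepare_output_dir(path):
    """Create a dedicated directory for the rendered frames and return it."""
    out_dir = Path(path).expanduser().resolve()
    out_dir.mkdir(parents=True, exist_ok=True)
    return out_dir


def render_animation_with_eevee(output_dir, prefix="frame_"):
    """Render every frame of the current scene into output_dir using EEVEE."""
    # bpy only exists inside Blender's bundled Python, so import it lazily.
    import bpy

    scene = bpy.context.scene
    scene.render.engine = "BLENDER_EEVEE"  # EEVEE identifier in Blender 2.83
    # Blender appends the frame number and file extension to this prefix.
    scene.render.filepath = str(prepare_output_dir(output_dir) / prefix)
    # Renders the scene's whole frame range and writes one file per frame.
    bpy.ops.render.render(animation=True)
```

Calling `render_animation_with_eevee("~/renders/sheets")` from Blender's Python console would write the frames of the current scene into that folder. The camera and lights still have to be set up beforehand.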
